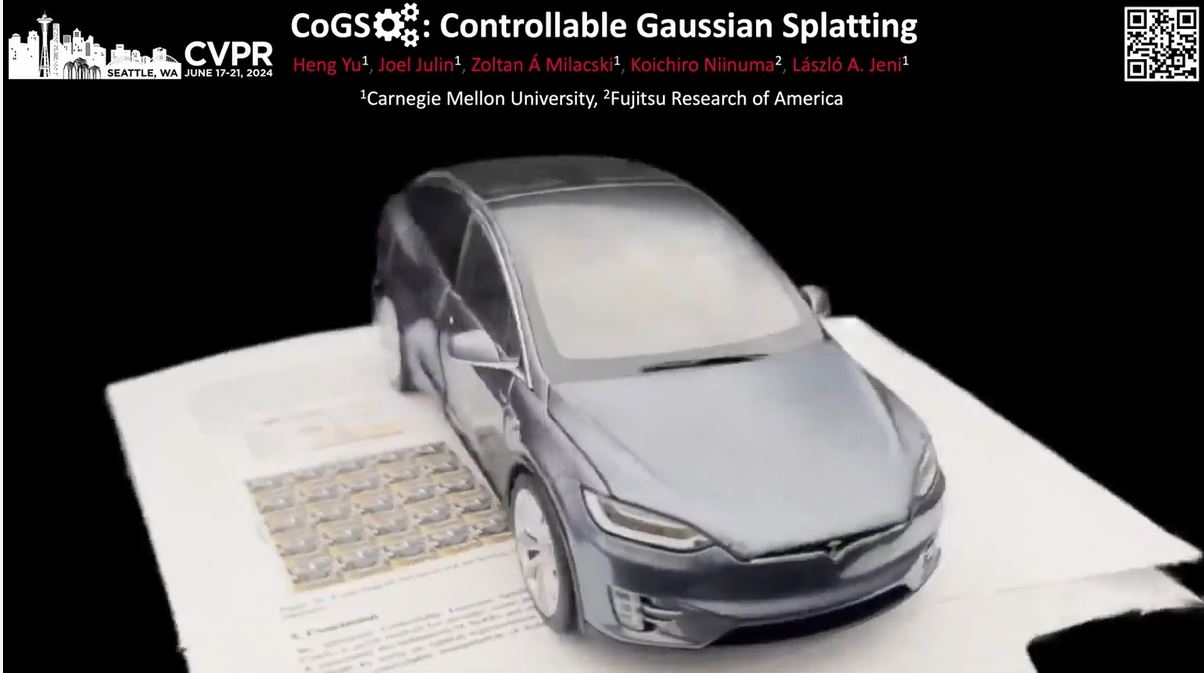

CoGS: Revolutionizing 3D Structure Reanimation with Controllable Gaussian Splatting

By Ashlyn Lacovara

Capturing and reanimating deformable 3D structures is crucial for advancing virtual and augmented reality experiences. Laszlo Jeni, an affiliated faculty member at the Extended Reality Technology Center, is collaborating with Fujitsu on a project called CoGS: Controllable Gaussian Splatting. The method captures and reanimates deformable 3D structures using a single monocular camera. It achieves 3D reconstruction accuracy comparable to that of the large multi-camera systems previously required for this task.

Traditional methods for capturing and reanimating 3D structures often involve complex, resource-intensive setups. Techniques like Neural Radiance Fields (NeRFs) have made significant strides in high-fidelity scene representation but are primarily focused on static scenes. Extending NeRFs to dynamic scenarios introduces additional complexities, making them less practical for real-time applications.

3D Gaussian Splatting has emerged as an alternative, but existing dynamic methods require synchronized multi-view cameras and lack dynamic controllability. This is where CoGS stands out. It addresses these limitations by enabling direct, real-time manipulation of scene elements without the need for pre-computed control signals.

CoGS leverages Gaussian Splatting, a technique that distributes Gaussian functions over a set of data points or a surface. Gaussian functions are mathematical functions with a characteristic bell-shaped curve. This technique is widely used in computer graphics to create smooth transitions and effects. The real advance, however, lies in the "controllable" aspect of CoGS. It allows the Gaussian parameters, such as position, width, and amplitude, to be adjusted dynamically.
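For reference, the bell curve described above is the one-dimensional Gaussian shown first below. Here $a$ is the amplitude, $\mu$ the position, and $\sigma$ the width. The standard 3D Gaussian Splatting formulation (Kerbl et al., 2023) generalizes this curve to three dimensions. It replaces the width with a covariance matrix $\Sigma$, built from a rotation $R$ and a diagonal scale matrix $S$. A per-Gaussian opacity plays the role of the amplitude at render time. This is background on the general technique, and the exact parameterization CoGS uses may differ.

$$
g(x) = a \exp\!\left(-\frac{(x - \mu)^2}{2\sigma^2}\right)
$$

$$
G(\mathbf{x}) = \exp\!\left(-\tfrac{1}{2}(\mathbf{x} - \boldsymbol{\mu})^\top \Sigma^{-1} (\mathbf{x} - \boldsymbol{\mu})\right), \qquad \Sigma = R\,S\,S^\top R^\top
$$

In this form, changing $\boldsymbol{\mu}$ moves a Gaussian, and changing $S$ or $R$ stretches or rotates it. Changing its opacity fades it in or out.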

Key Benefits:

- Simplified Setup: Unlike existing dynamic 3D Gaussian methods, which require complex, synchronized multi-view camera setups, CoGS operates efficiently with a single monocular camera.

- Enhanced Controllability: CoGS allows for real-time control of scene elements, making it possible to directly manipulate dynamic scenarios without the need for pre-computed control signals. This flexibility is crucial for applications in virtual reality, augmented reality, and interactive media.

- Superior Performance: In evaluations using both synthetic and real-world datasets, CoGS consistently outperformed existing dynamic and controllable neural representations in terms of visual fidelity and real-time responsiveness.

CoGS builds upon the foundational principles of Gaussian Splatting by incorporating a differentiable rasterization pipeline. Each 3D Gaussian is defined by a position, a covariance matrix, an opacity, and a color. CoGS projects these 3D Gaussians onto the 2D image plane, which lets it render them efficiently and integrate them into dynamic scenes.
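The minimal pure-Python sketch below makes the projection and blending steps concrete. It follows the original 3D Gaussian Splatting formulation (Kerbl et al., 2023). Each 3D Gaussian is projected to a 2D Gaussian using the Jacobian of the camera projection. The projected splats are then alpha-blended front to back in depth order. This illustrates the standard technique and is not the CoGS implementation. The function names are hypothetical, and the sketch rests on these simplifying assumptions:

- Gaussians are already expressed in camera coordinates.
- The camera is a pinhole camera with its principal point at the origin.
- Tiling, culling, and gradients are omitted. A real pipeline runs on the GPU and differentiates through these steps.

```python
import math

def project_gaussian(mean_cam, cov_cam, fx, fy):
    """Project a 3D Gaussian (camera coordinates, z > 0) onto the image plane.

    Uses the local affine approximation from 3D Gaussian Splatting:
    cov_2d = J @ cov_cam @ J.T, where J is the Jacobian of the pinhole
    projection (u, v) = (fx * x / z, fy * y / z) at the Gaussian's mean.
    The principal point is assumed to be at (0, 0) for simplicity.
    """
    x, y, z = mean_cam
    mean_2d = (fx * x / z, fy * y / z)
    # 2x3 Jacobian of (u, v) with respect to (x, y, z)
    J = [[fx / z, 0.0, -fx * x / (z * z)],
         [0.0, fy / z, -fy * y / (z * z)]]
    # J @ cov_cam -> 2x3
    JS = [[sum(J[i][k] * cov_cam[k][j] for k in range(3)) for j in range(3)]
          for i in range(2)]
    # (J @ cov_cam) @ J.T -> 2x2 image-plane covariance
    cov_2d = [[sum(JS[i][k] * J[j][k] for k in range(3)) for j in range(2)]
              for i in range(2)]
    return mean_2d, cov_2d, z

def gaussian_weight_2d(pixel, mean_2d, cov_2d):
    """Unnormalized 2D Gaussian exp(-0.5 * d^T cov^-1 d) evaluated at a pixel."""
    dx, dy = pixel[0] - mean_2d[0], pixel[1] - mean_2d[1]
    (s00, s01), (s10, s11) = cov_2d
    det = s00 * s11 - s01 * s10
    # Quadratic form using the closed-form inverse of a 2x2 matrix
    q = (s11 * dx * dx - (s01 + s10) * dx * dy + s00 * dy * dy) / det
    return math.exp(-0.5 * q)

def composite_pixel(pixel, splats):
    """Front-to-back alpha blending of depth-sorted splats at one pixel.

    Each splat is (mean_2d, cov_2d, depth, opacity, rgb). Computes
    C = sum_i rgb_i * alpha_i * prod_{j<i} (1 - alpha_j).
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for mean_2d, cov_2d, _depth, opacity, rgb in sorted(splats, key=lambda s: s[2]):
        alpha = opacity * gaussian_weight_2d(pixel, mean_2d, cov_2d)
        for ch in range(3):
            color[ch] += rgb[ch] * alpha * transmittance
        transmittance *= 1.0 - alpha
    return color

# Example: a red Gaussian in front of a blue one, both on the optical axis.
cov = [[0.01, 0.0, 0.0], [0.0, 0.01, 0.0], [0.0, 0.0, 0.01]]
red = project_gaussian((0.0, 0.0, 2.0), cov, 100.0, 100.0)
blue = project_gaussian((0.0, 0.0, 4.0), cov, 100.0, 100.0)
# Listed back to front on purpose; composite_pixel sorts by depth.
splats = [(*blue, 0.5, (0.0, 0.0, 1.0)), (*red, 0.8, (1.0, 0.0, 0.0))]
print(composite_pixel((0.0, 0.0), splats))  # approximately [0.8, 0.0, 0.1]
```

The red splat covers 80% of the pixel. Only the remaining 20% of transmittance reaches the blue splat behind it. Because every operation here is smooth, a differentiable rasterizer can propagate image-space errors back to each Gaussian's position, covariance, opacity, and color.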

The process begins with the initialization of 3D Gaussians from a point-cloud, followed by a stabilization phase focused on static elements. Subsequent phases involve updating parameters dynamically through deformation networks, ensuring geometric consistency and high-quality scene reconstruction over time.
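The training schedule described above can be sketched in pure Python. This is a hypothetical illustration, not the CoGS implementation. The following are all assumptions:

- the helper names `init_gaussians`, `DeformationMLP`, and `deformed_means`;
- the phase length `STATIC_ITERS`;
- the initial scale and opacity values;
- deforming only Gaussian positions (deformation networks may also predict rotation and scale changes).

The MLP weights are random because training is omitted. Training would backpropagate a rendering loss through the differentiable rasterizer.

```python
import math
import random

STATIC_ITERS = 1000  # hypothetical length of the static stabilization phase

def init_gaussians(points, scale=0.01, opacity=0.1):
    """Create one Gaussian per point-cloud point (hypothetical initial values)."""
    return [{"mean": tuple(p), "scale": (scale,) * 3, "opacity": opacity}
            for p in points]

class DeformationMLP:
    """Tiny MLP mapping (x, y, z, t) to a position offset (dx, dy, dz).

    Weights are random here; in practice they would be learned.
    """
    def __init__(self, hidden=16, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.gauss(0.0, 0.5) for _ in range(4)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        # Small output weights so the initial deformation stays near zero.
        self.w2 = [[rng.gauss(0.0, 0.01) for _ in range(hidden)] for _ in range(3)]
        self.b2 = [0.0] * 3

    def __call__(self, mean, t):
        inp = list(mean) + [t]
        h = [math.tanh(sum(w * v for w, v in zip(row, inp)) + b)
             for row, b in zip(self.w1, self.b1)]
        return tuple(sum(w * v for w, v in zip(row, h)) + b
                     for row, b in zip(self.w2, self.b2))

def deformed_means(gaussians, net, t, iteration):
    """Gaussian positions used for rendering time t at a given training iteration."""
    if iteration < STATIC_ITERS:
        # Stabilization phase: render the canonical (static) Gaussians unchanged.
        return [g["mean"] for g in gaussians]
    # Dynamic phase: add the network's time-dependent offset to each mean.
    return [tuple(m + o for m, o in zip(g["mean"], net(g["mean"], t)))
            for g in gaussians]

points = [(0.0, 0.0, 1.0), (0.1, -0.2, 1.5)]
gaussians = init_gaussians(points)
net = DeformationMLP()
print(deformed_means(gaussians, net, t=0.5, iteration=10))    # canonical positions
print(deformed_means(gaussians, net, t=0.5, iteration=5000))  # positions plus offsets
```

Keeping the deformation switched off during the first phase lets the static geometry settle before time-dependent motion is introduced. This mirrors the ordering the paragraph describes.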

In conclusion, CoGS represents a significant advance in 3D structure reanimation. By addressing the limitations of traditional methods and offering real-time, controllable manipulation of dynamic scenes, CoGS paves the way for more immersive and interactive XR experiences. As research and development continue, the technology's potential applications are vast. It has the potential to revolutionize the way we interact with digital environments.

This research will be presented at CVPR 2024 in June. To learn more, see the entire paper here: Link

Researchers: